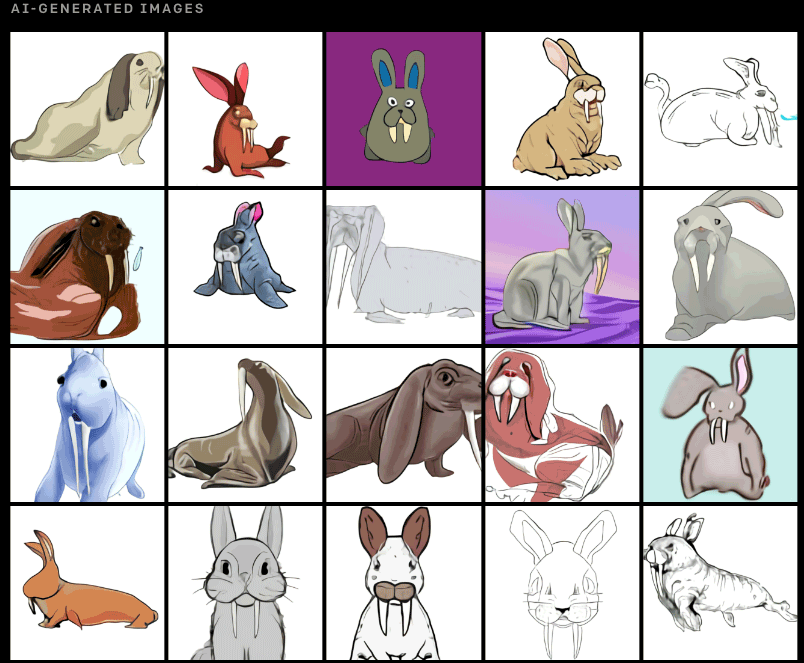

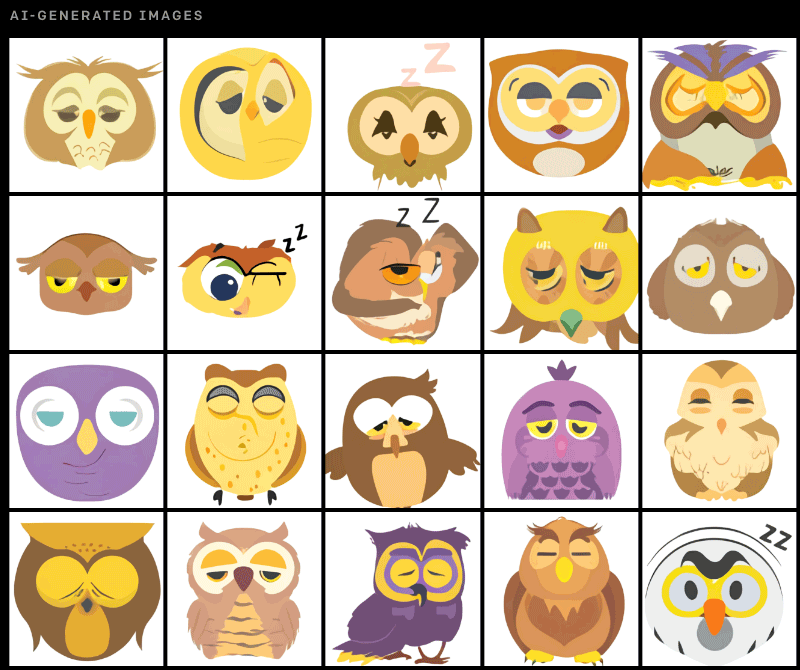

Yesterday OpenAI released DALL-E, a showcase of examples demonstrating image generation from text. The complexity of these never-before-seen examples is impressive. The quality of the illustrations and images makes you wonder where this will be used when anything you can think up can be convincingly generated.

Art, design, fashion, or possibly just to deceive? Still, I’d love to see more categories or variations, and possibly an open API.

The examples are uncanny and familiar while still being “unique” or new. The low effort needed to produce many satisfactory results will be enough for many people, especially if the cost is low.

It’s easy to point to jobs or industries that this could one day replace; however, I see more people working with machine learning. Human preference should steer results. A fleshy discriminator.

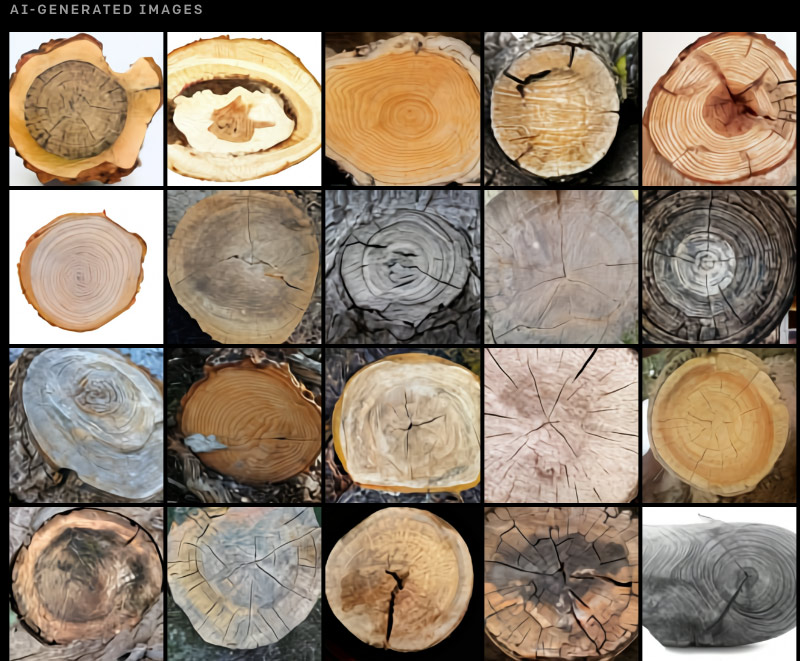

And just the day before, another generative adversarial network, or GAN, was shown, called Taming Transformers. This technique uses segmentation trained from landscape images to generate high-resolution landscapes.

Cryptoart seems to be booming for well-known generative artists at the moment, and tools like these now eclipse some of the earliest GAN art sold at auction.

Previously, other trained networks such as StyleGAN and BigGAN piqued my interest in generating art with code. Neural networks for image generation have improved in leaps and bounds. Better UIs that let anyone explore make this really accessible and easy.

Exciting times.