My latest interest in microcontrollers has me learning all about Meshtastic mesh radio devices.

The Meshtastic website puts it concisely – “An open source, off-grid, decentralized, mesh network built to run on affordable, low-power devices”.

That's a lot to unpack, but it sits squarely within my Venn diagram of interests. As I dig into this niche community of RF enthusiasts, I realize there are almost limitless applications, reasons to use it, and areas to explore or learn about.

Essentially, these are text-based walkie-talkies operating on an open radio frequency band, with much more range than WiFi.

Messages bounce, or “hop”, between similar nearby devices on the same channel (each device is called a “node”), which in some cases lets them travel great distances.
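To get a feel for how hopping works, here is a toy simulation of flooding with a hop limit. It assumes the simple model I understand Meshtastic to use: the sender stamps a hop limit on the message (3 by default, as far as I know), and each node that hears the message for the first time rebroadcasts it with the limit reduced by one, until it reaches zero. The `flood` function and the graph format are my own illustration, and real firmware adds timing, signal-strength-based delays and other details on top of this.

```python
from collections import deque


def flood(adjacency: dict[str, list[str]], origin: str, hop_limit: int = 3) -> dict[str, int]:
    """Toy model of hop-limited flooding (hypothetical helper, not a Meshtastic API).

    adjacency maps each node to the nodes within radio range of it.
    Returns {node: number of radio links the message travelled to reach it}.
    """
    heard = {origin: 0}
    # Each queue entry is a transmission: (transmitting node, hop limit in the packet).
    queue = deque([(origin, hop_limit)])
    while queue:
        node, hops_left = queue.popleft()
        for neighbour in adjacency.get(node, []):
            if neighbour in heard:
                continue  # already heard this message, so ignore the duplicate
            heard[neighbour] = heard[node] + 1
            if hops_left > 0:
                # The neighbour relays it with one fewer hop remaining.
                queue.append((neighbour, hops_left - 1))
    return heard


# Six nodes in a line, each only in range of its immediate neighbours.
line = {
    "A": ["B"], "B": ["A", "C"], "C": ["B", "D"],
    "D": ["C", "E"], "E": ["D", "F"], "F": ["E"],
}
print(flood(line, "A", hop_limit=3))
```

With a hop limit of 3, the message from A is relayed by B, C and D, so it reaches E four links away, while F at the end of the line never hears it. Raising the hop limit extends reach, but every extra relay also adds more traffic to the channel.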

If you have two nodes, and they are close enough to communicate, you can send text messages or other string data between them.

Configured correctly, that message can be encrypted, or you can choose to chat on the public default channel, much like Citizens Band radio: “Breaker, breaker, 1-9”.

Some nodes have screens, GPS, or other sensors, but much of Meshtastic can be used and configured through the phone app UI.

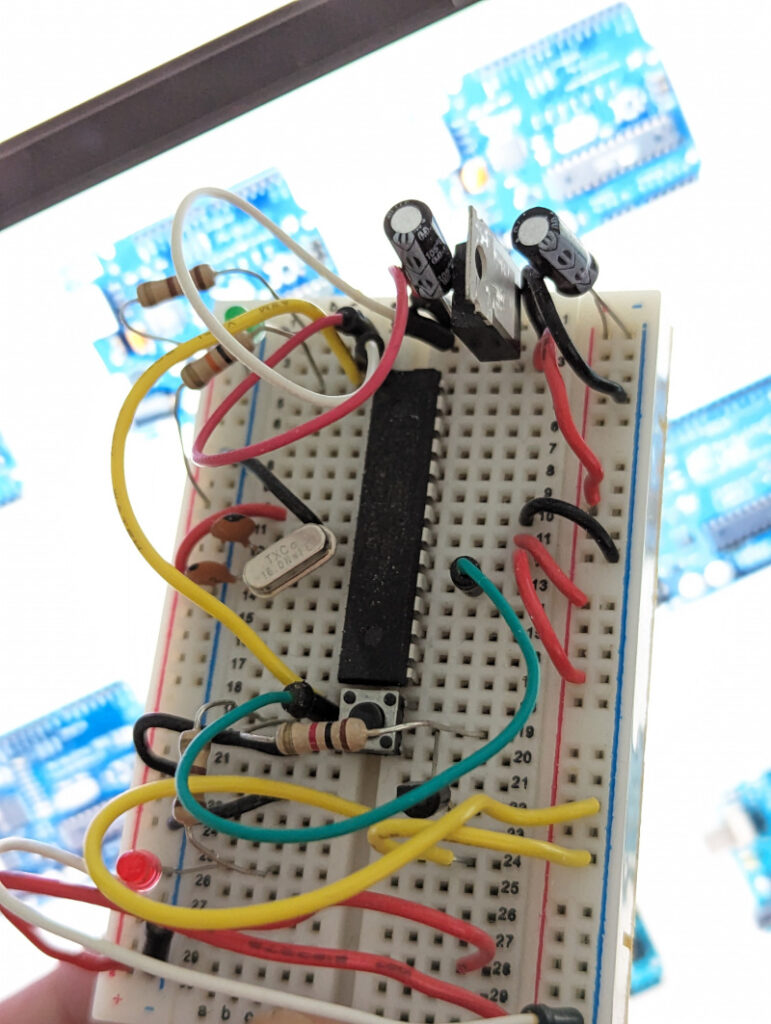

I have used LoRa (Long Range) radios on microcontrollers in the past, but that involved sending and parsing raw packets with the RFM95 series of radios. I enjoyed seeing just how far I could transmit and receive data (not that far).

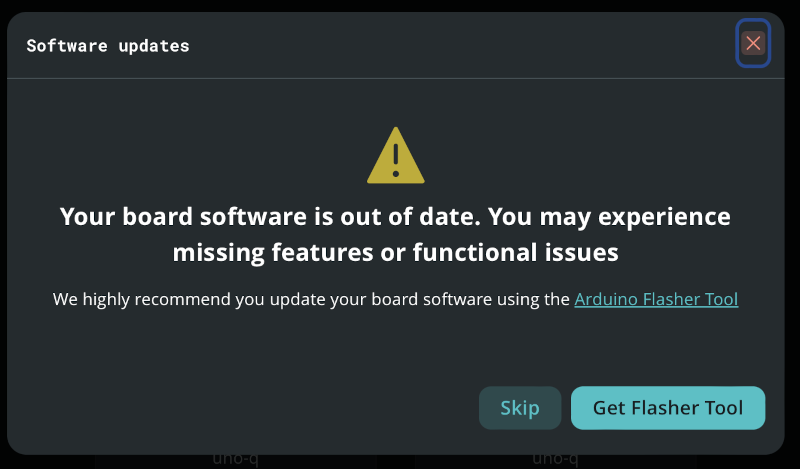

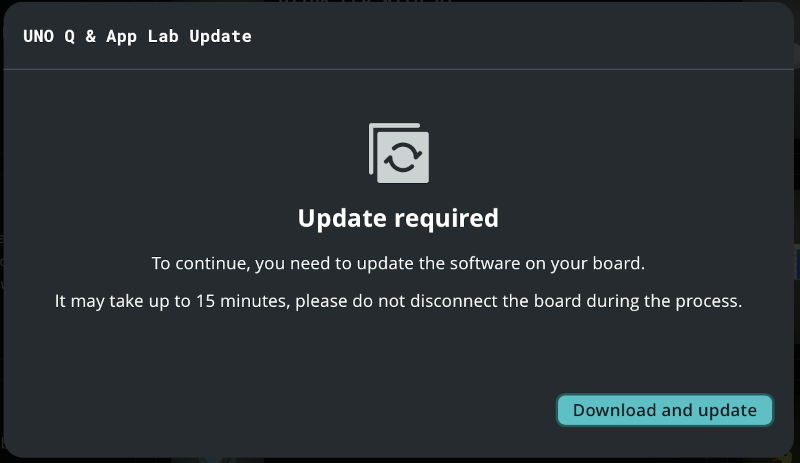

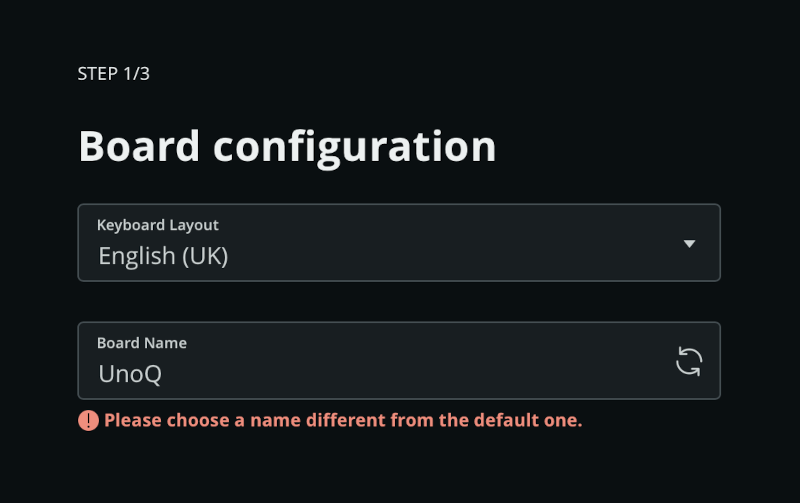

Meshtastic is messy but moving quickly. Older documentation or tutorials online might not reflect the latest UI or experience. These radios are not inherently reliable, and there are many variations.

Your experience will be directly related to your device’s functions, app version, the number of Meshtastic nodes in your area, distance, and line of sight to another node.

I started in the mountains with no other users, but I had two radios, so I could ping one from the other and get a good understanding of how it all works. Occasionally another node shows up on weekends when tourists travel into town.

When I travelled into Vancouver, driving with default notifications on Android, I learned that “New Node Seen” notifications were a bad idea.

Too few nodes or too many nodes: each has its own issues. Dense areas such as Vancouver have switched their modem settings away from the default to get a better experience.
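One reason a busy mesh might move off the default is airtime: every message (and every relay of it) occupies the shared channel for a while, and slower, longer-range settings hold it longer. Below is a sketch of the standard LoRa time-on-air formula from Semtech's SX127x datasheet. The preset values are assumptions based on my understanding of the firmware: LongFast as spreading factor 11 at 250 kHz, MediumFast as spreading factor 9 at 250 kHz, both with coding rate 4/5 and a 16-symbol preamble. Check the current Meshtastic docs before relying on them.

```python
import math


def lora_airtime_ms(payload_bytes: int, sf: int, bw_hz: float, cr: int = 1,
                    preamble_symbols: int = 16, explicit_header: bool = True,
                    crc: bool = True) -> float:
    """LoRa time on air in milliseconds (Semtech SX127x datasheet formula).

    cr is 1..4 for coding rates 4/5..4/8. The preamble length default of 16
    is an assumption about Meshtastic, not a LoRa requirement.
    """
    t_sym = (2 ** sf) / bw_hz * 1000  # symbol duration in ms
    de = 1 if t_sym >= 16 else 0      # low data rate optimisation for long symbols
    ih = 0 if explicit_header else 1  # 1 means implicit header mode
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    payload_symbols = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (cr + 4), 0)
    preamble_ms = (preamble_symbols + 4.25) * t_sym
    return preamble_ms + payload_symbols * t_sym


# Same 30-byte packet under two assumed presets.
long_fast = lora_airtime_ms(30, sf=11, bw_hz=250_000)
medium_fast = lora_airtime_ms(30, sf=9, bw_hz=250_000)
print(f"LongFast: {long_fast:.0f} ms, MediumFast: {medium_fast:.0f} ms")
```

Under these assumptions the same packet takes roughly 477 ms versus 130 ms, so a faster preset leaves far more room on the channel for a crowded mesh, at the cost of some range.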

On top of the radio hardware and phone app, there is also an optional connection to the internet using MQTT. Maps, dashboards, and every other conceivable web-based project have used MQTT to pull in data from the radio network, such as node location and radio type.
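If you point an MQTT client at a broker carrying Meshtastic traffic with JSON output enabled, each message arrives as a small JSON document. Here is a sketch of turning one into a readable line. The field names (`type`, `sender`, `payload`, `text`, `latitude_i`, `longitude_i`) are my assumption about the JSON shape, with coordinates assumed to be integers in units of 1e-7 degrees; check them against real messages from your own broker. The subscription itself (for example with the paho-mqtt library) is left out, and `summarize` is just an illustrative name.

```python
import json


def summarize(raw: bytes) -> str:
    """Turn one Meshtastic JSON MQTT message into a short human-readable line.

    The message layout here is assumed, not taken from an official schema.
    """
    msg = json.loads(raw)
    kind = msg.get("type", "unknown")
    sender = msg.get("sender", "?")
    payload = msg.get("payload", {})
    if kind == "text":
        return f"{sender}: {payload.get('text', '')}"
    if kind == "position":
        # Assumed integer coordinates scaled by 1e7, converted back to degrees.
        lat = payload.get("latitude_i", 0) / 1e7
        lon = payload.get("longitude_i", 0) / 1e7
        return f"{sender} at {lat:.5f}, {lon:.5f}"
    return f"{sender} sent {kind}"


print(summarize(b'{"type": "text", "sender": "!a1b2c3d4", "payload": {"text": "hello"}}'))
```

A map or dashboard would do essentially this in a loop: subscribe, parse each message, and update a marker or a chat log per sender.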

Antenna design, 3D-printed enclosures, solar and battery setups, different sensors or microcontrollers… there is plenty here for gearheads, hams, EEs, and software developers.

The Meshtastic Discord is super busy, with lots of info flying around. It's a good place to lurk and search back through related issues and discussions.

Recent changes mean each radio pushes out less data and telemetry by default, so you might not see any other nodes right away. Given some time, you will eventually see them if they are in range.

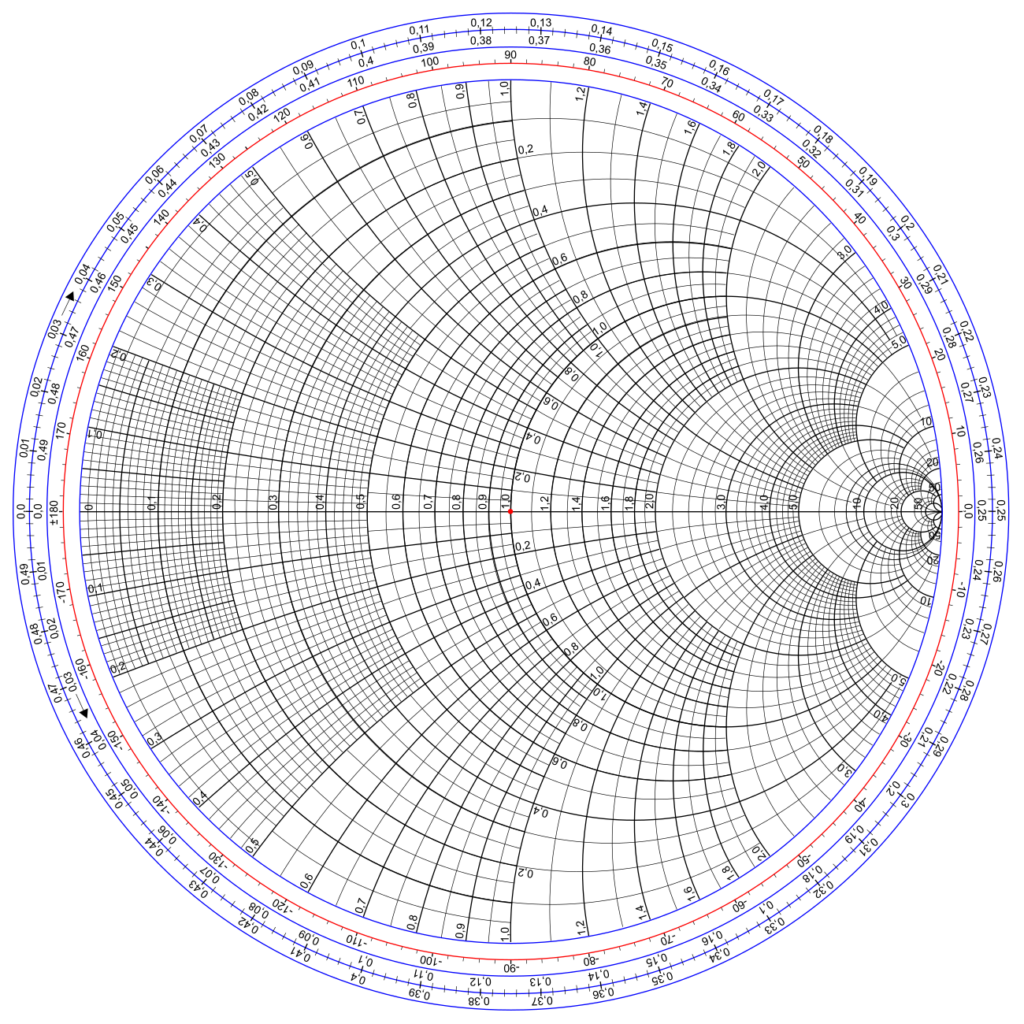

With an SDR (Software Defined Radio), you can also watch this radio traffic in real time. In North America, this is in the open 915 MHz band.
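To tune an SDR you need an actual center frequency, not just "915 MHz". As I understand it, the firmware divides the region's band into slots one channel-bandwidth wide and picks a slot based on the channel; the default LongFast channel in the US is often cited as slot 20, around 906.875 MHz. The sketch below assumes a US band of 902 to 928 MHz and a 250 kHz bandwidth. The slot formula is my reading of how it works, and `slot_count` and `slot_frequency_mhz` are hypothetical helpers, not a Meshtastic API.

```python
def slot_count(freq_start_mhz: float = 902.0, freq_end_mhz: float = 928.0,
               bw_khz: float = 250.0) -> int:
    """Number of whole channel slots that fit in the band (assumed US limits)."""
    return int((freq_end_mhz - freq_start_mhz) // (bw_khz / 1000))


def slot_frequency_mhz(slot: int, freq_start_mhz: float = 902.0,
                       freq_end_mhz: float = 928.0, bw_khz: float = 250.0) -> float:
    """Center frequency of a 1-indexed slot, under the assumed slot layout."""
    n = slot_count(freq_start_mhz, freq_end_mhz, bw_khz)
    if not 1 <= slot <= n:
        raise ValueError(f"slot must be between 1 and {n}")
    bw_mhz = bw_khz / 1000
    # Skip (slot - 1) whole slots, then move half a bandwidth to the slot's center.
    return freq_start_mhz + bw_mhz / 2 + (slot - 1) * bw_mhz


print(slot_count(), slot_frequency_mhz(20))
```

Under these assumptions there are 104 slots and slot 20 sits at 906.875 MHz, which is a reasonable first place to look on an SDR waterfall before widening the search.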

Each country has its own rules, so be sure to check your local regulations.

If you're interested, make sure to buy two devices to start.

10-4 good buddy – OVER